Deployment

Register models

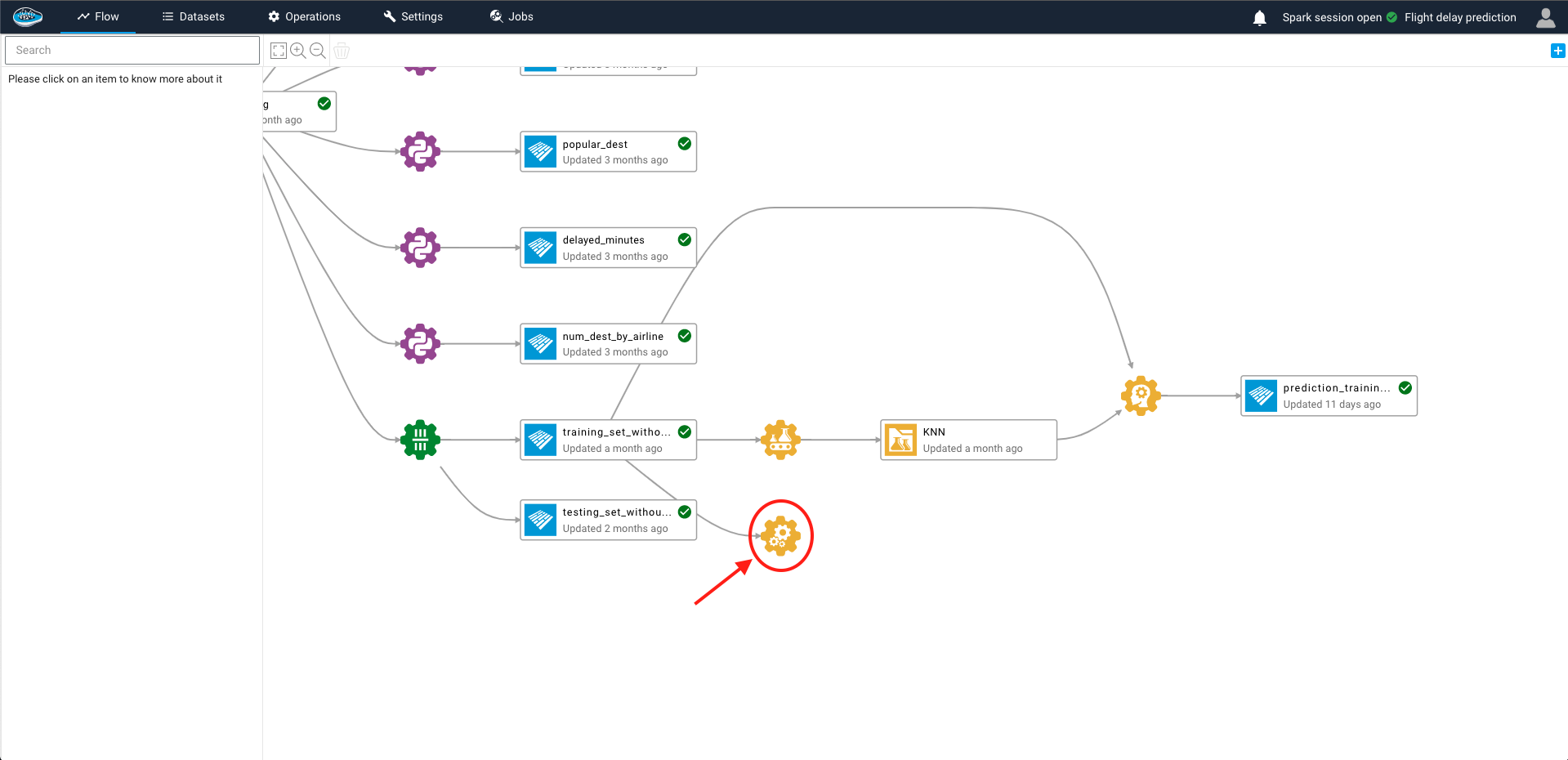

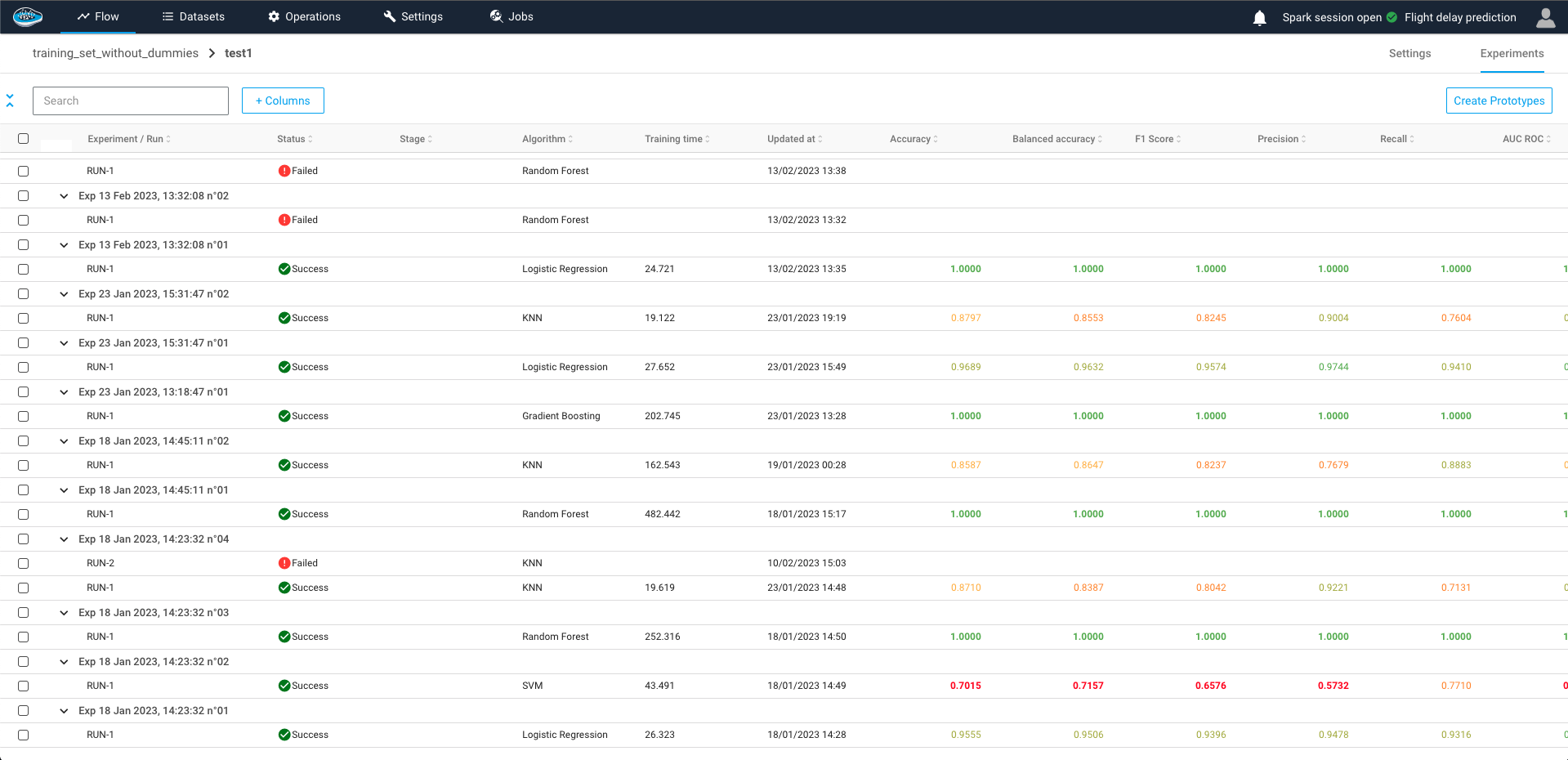

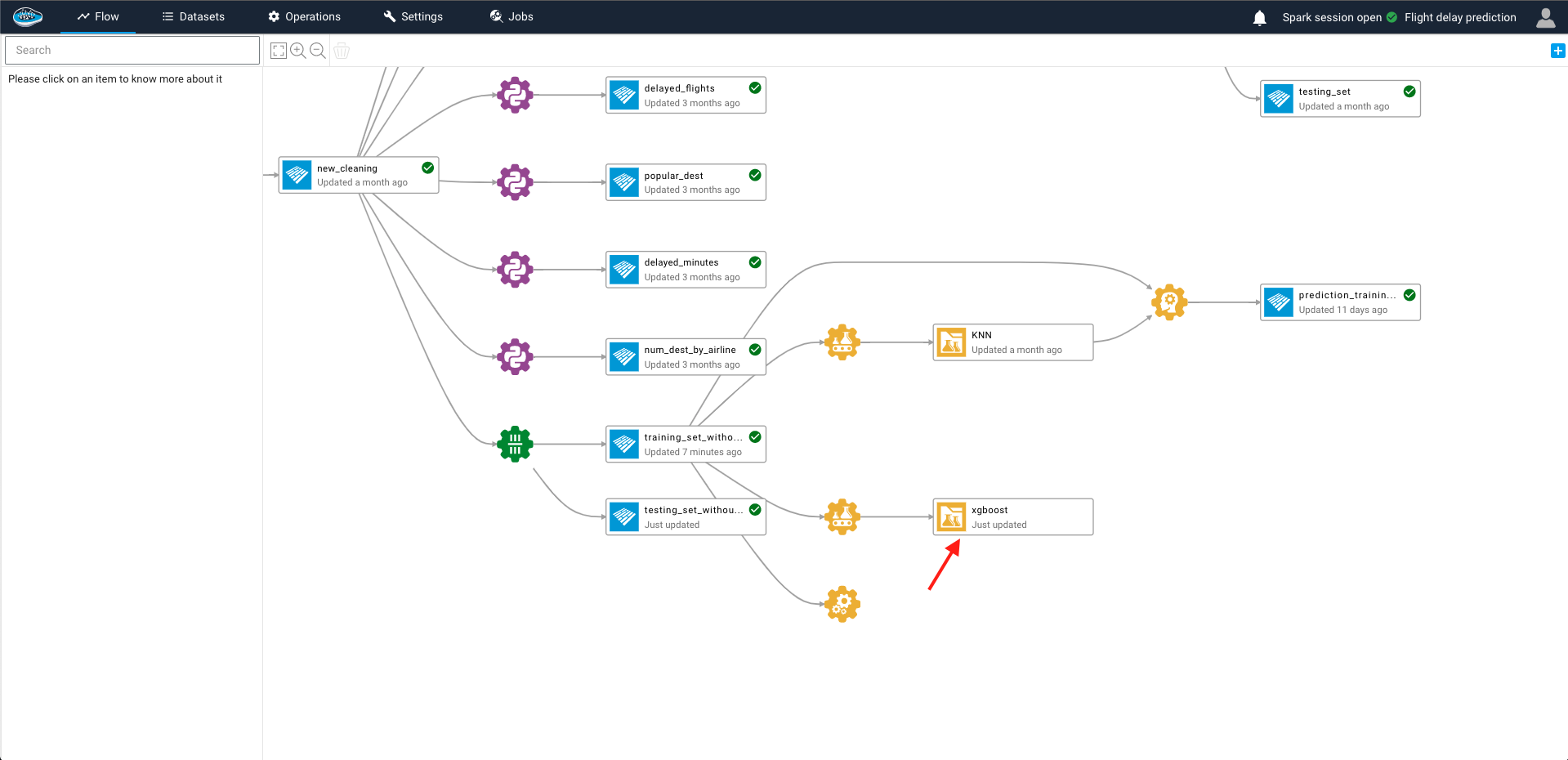

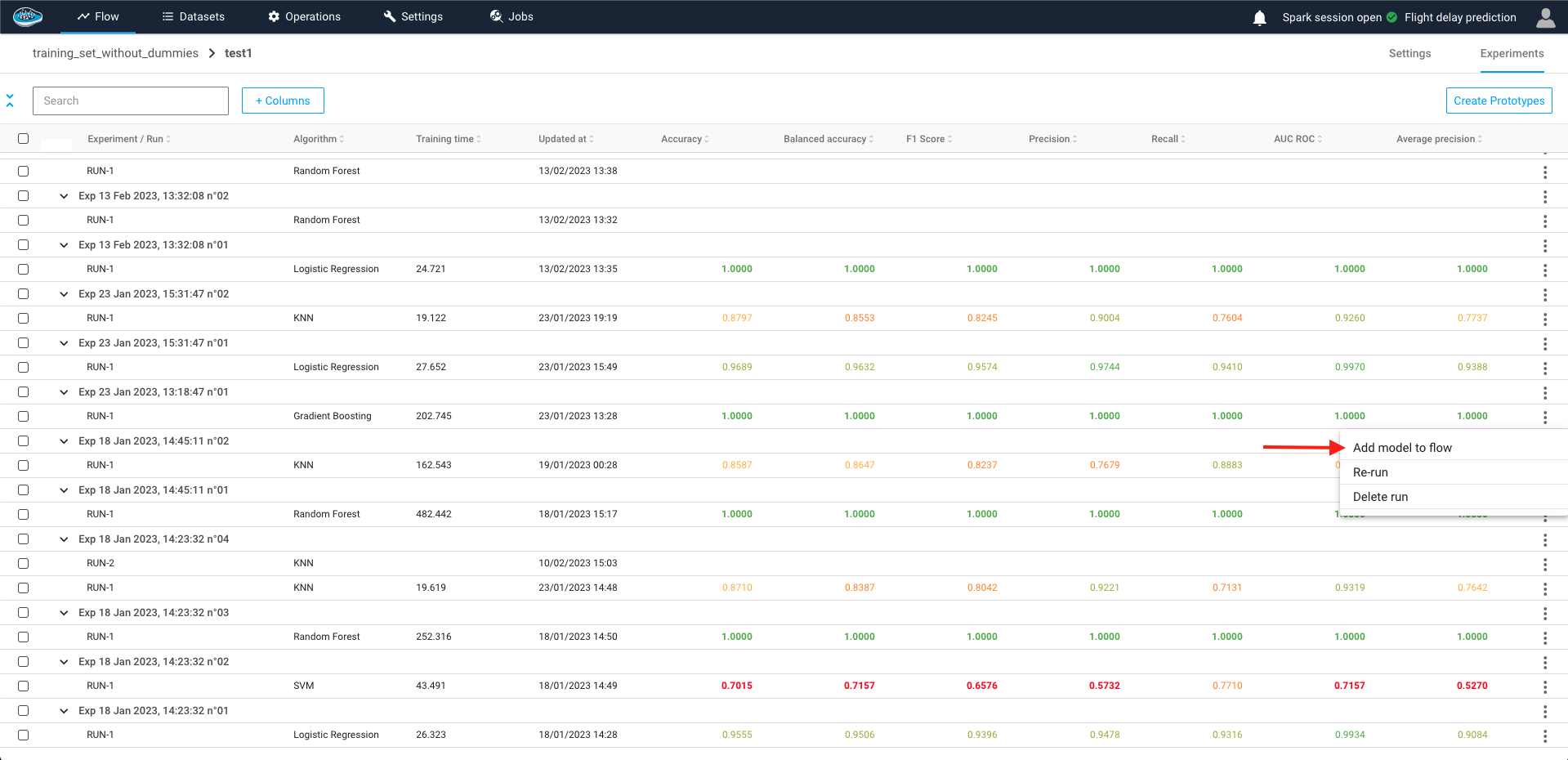

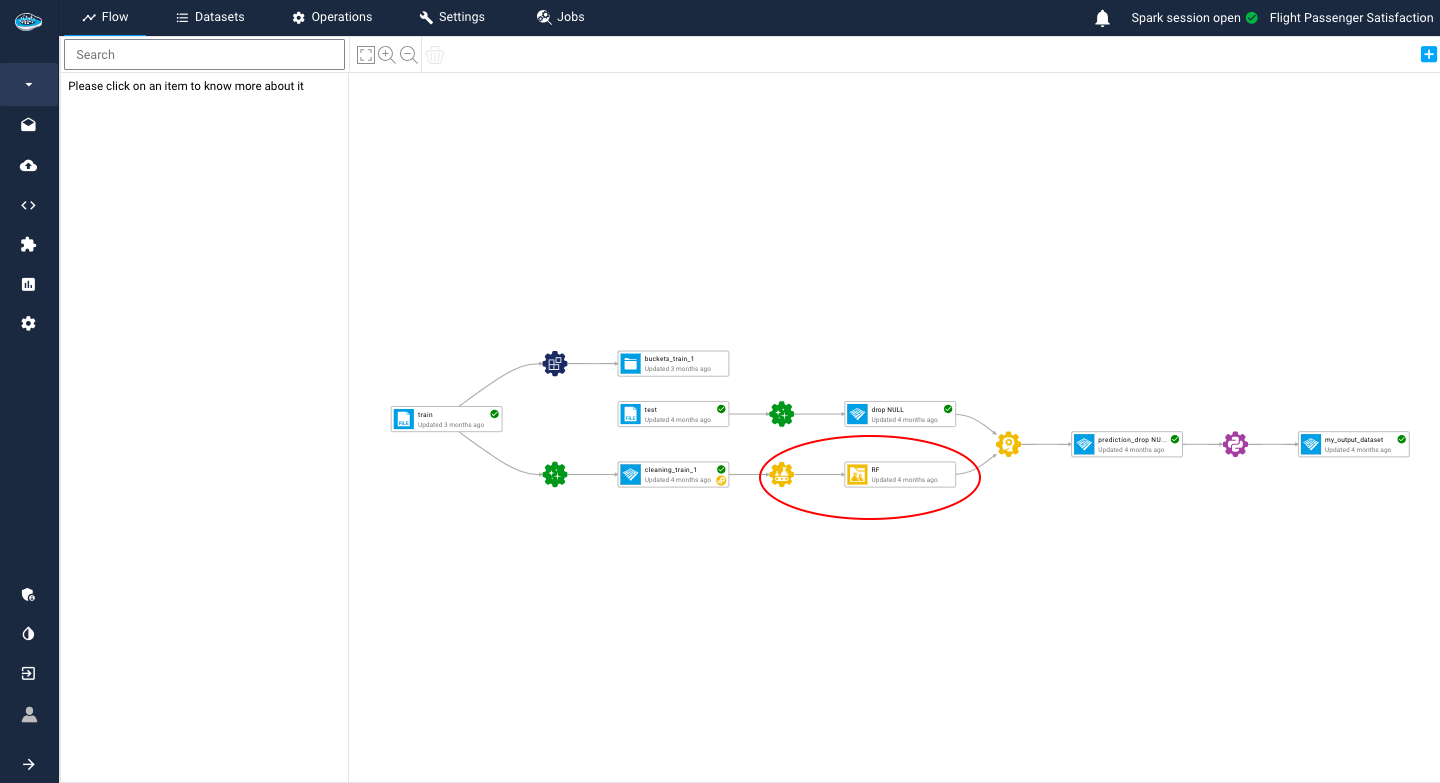

To deploy a model developed with papAI, first promote it to the Model Registry, then start the deployment from the registry.

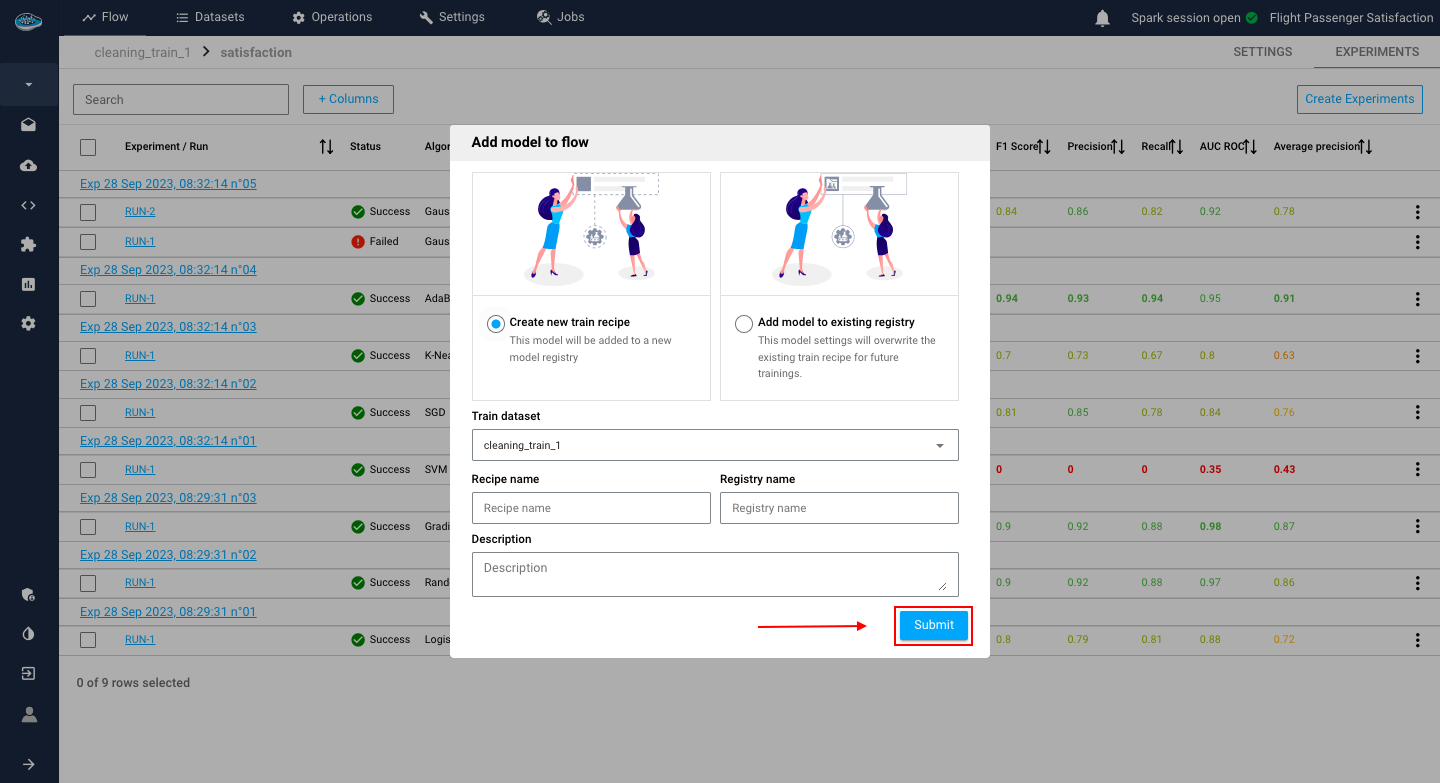

When you select Add to Flow, a window opens in which you can either create a new model registry containing the selected model or add the model to an existing registry. When creating a new registry, enter its settings in this window, including its name and description.

Settings

General Settings

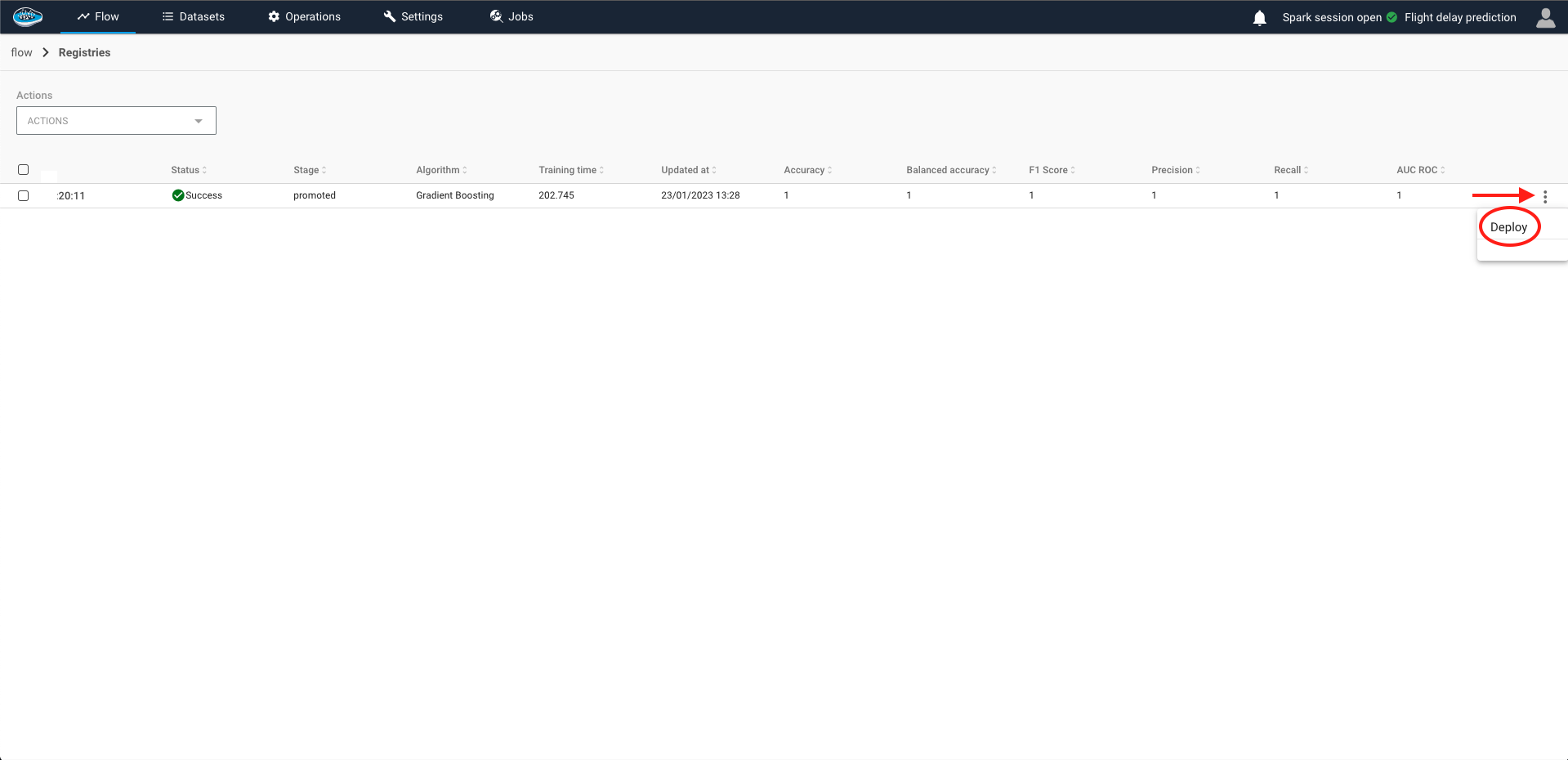

To initiate the deployment process, follow these steps:

- Click the Deploy button.

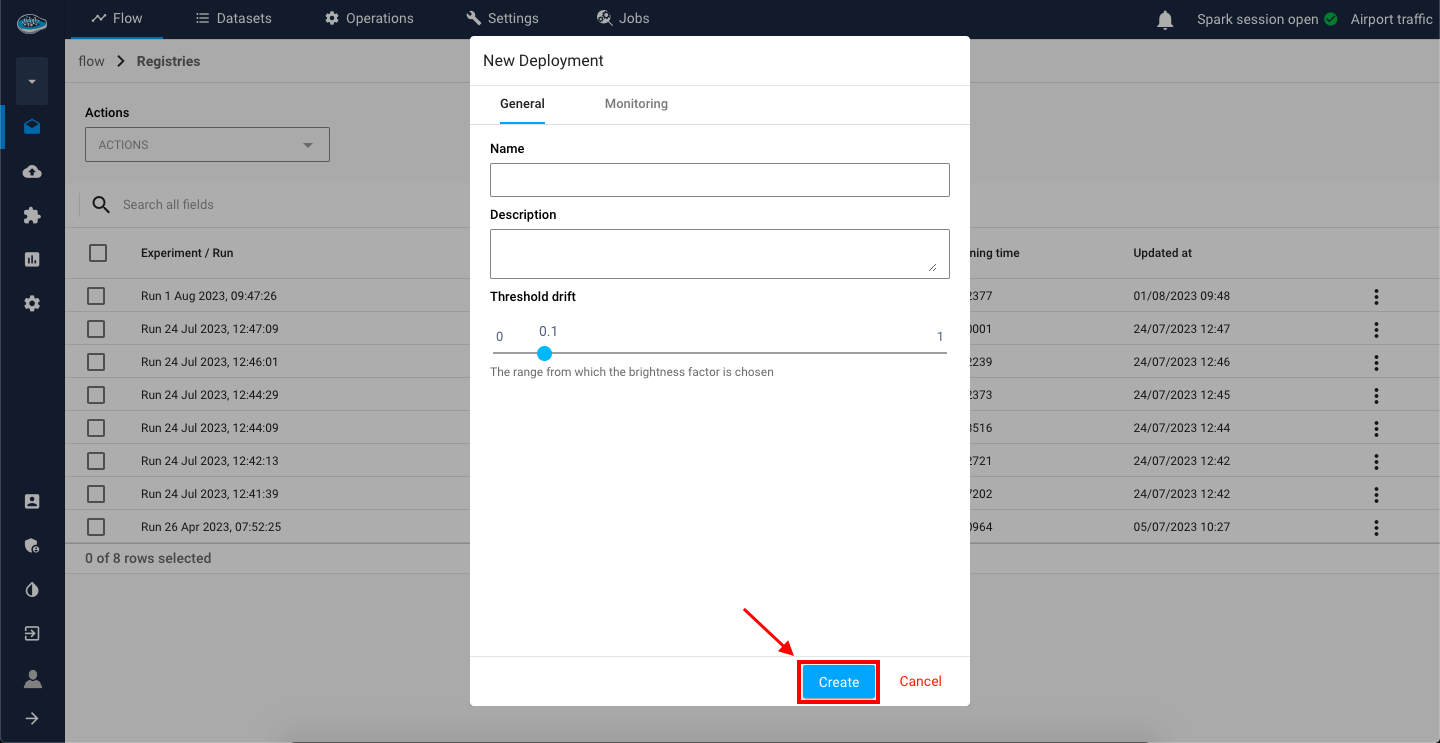

- In the dialog that opens, provide the required details:

  - Name: Enter a name for the service.

  - Description: Enter a description of the deployed model.

  - Threshold drift: Enter the threshold parameter used for drift detection.

- Click the Create button to finalize the deployment.

Info

The threshold parameter is a user-defined value that is compared with p_val, the p-value that measures the significance of the Kolmogorov-Smirnov (KS) test used for drift detection. Adjusting the threshold lets you tune the sensitivity of drift detection to your specific requirements: a lower threshold value indicates higher sensitivity to drift, while a higher threshold value indicates lower sensitivity.

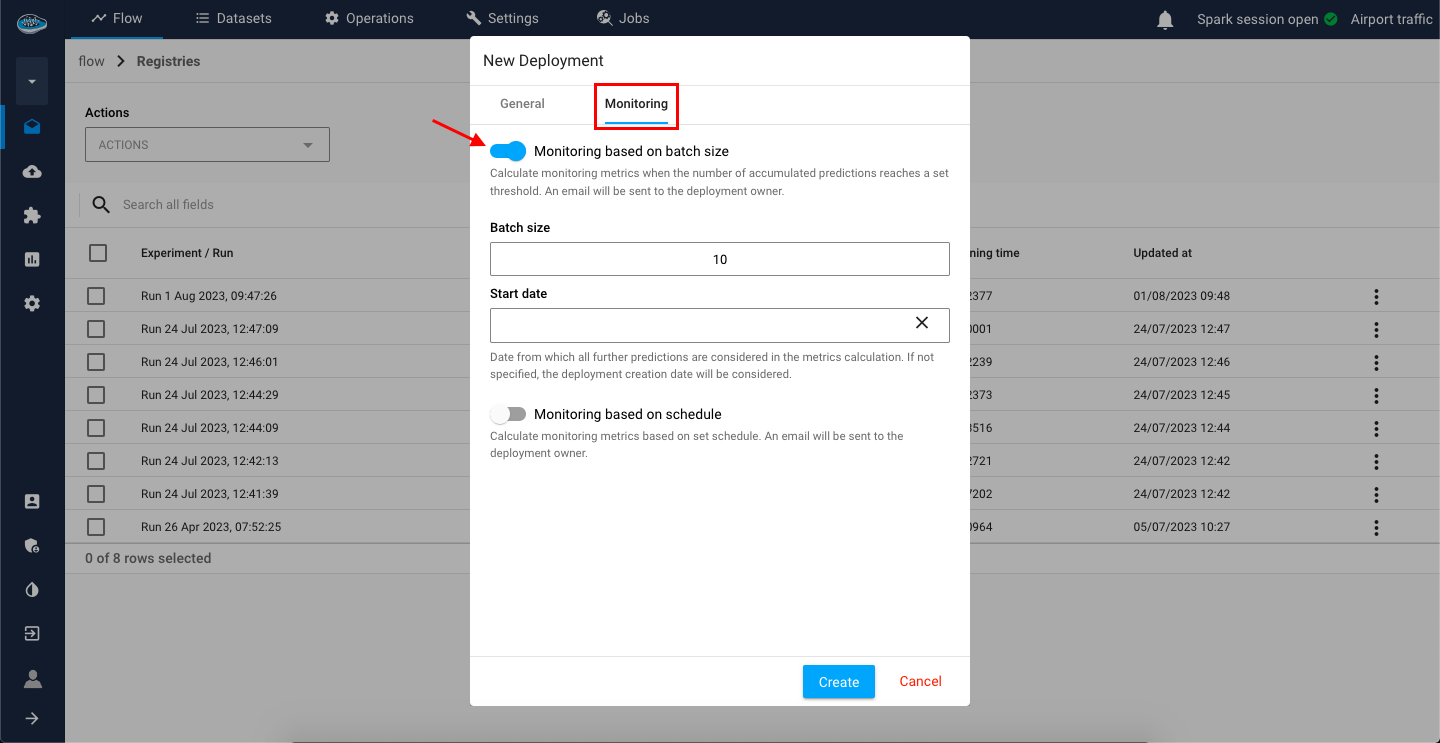
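The KS comparison described in the note above can be illustrated with a small, dependency-free sketch. It computes the two-sample KS statistic D (the largest gap between the empirical distributions of a reference sample and a current sample) and an approximate p-value from the asymptotic Kolmogorov distribution, using the effective-sample-size correction found in Numerical Recipes. This is not papAI's implementation: the function names are hypothetical, and in practice a library routine such as SciPy's `scipy.stats.ks_2samp` would normally be used instead.

```python
import bisect
import math
from typing import Sequence


def ks_statistic(reference: Sequence[float], current: Sequence[float]) -> float:
    """Two-sample KS statistic D: the largest absolute gap between the
    empirical CDFs of the reference sample and the current sample."""
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    ref = sorted(reference)
    cur = sorted(current)
    n, m = len(ref), len(cur)
    d = 0.0
    # The gap between two step functions can only change at observed values,
    # so evaluating both ECDFs at every observation finds the maximum.
    for x in ref + cur:
        cdf_ref = bisect.bisect_right(ref, x) / n
        cdf_cur = bisect.bisect_right(cur, x) / m
        d = max(d, abs(cdf_ref - cdf_cur))
    return d


def ks_p_value(d: float, n: int, m: int) -> float:
    """Approximate p-value for D from the asymptotic Kolmogorov distribution,
    with the effective-size correction used in Numerical Recipes."""
    ne = n * m / (n + m)
    lam = (math.sqrt(ne) + 0.12 + 0.11 / math.sqrt(ne)) * d
    if lam < 0.2:
        # For small lam the alternating series converges slowly, and the
        # p-value is within 1e-12 of 1 anywhere below 0.2, so return 1.0.
        return 1.0
    total = sum(
        2 * (-1) ** (j - 1) * math.exp(-2 * j * j * lam * lam)
        for j in range(1, 101)
    )
    return min(max(total, 0.0), 1.0)
```

For example, `ks_statistic(list(range(100)), list(range(200, 300)))` returns 1.0 because the two samples do not overlap, and the resulting p-value is vanishingly small. A p-value of this kind is the p_val that papAI compares with the threshold you set.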

Monitoring Settings

If you do not want to monitor manually, the platform offers two automated ways to monitor your predictions, compute data drift, and compare it with the selected threshold.

Monitoring based on batch size

The first option tracks data drift by computing it once the number of predictions reaches a predefined limit, called the batch size. Predictions are counted from the deployment's creation datetime, but you can set a different start datetime if required.

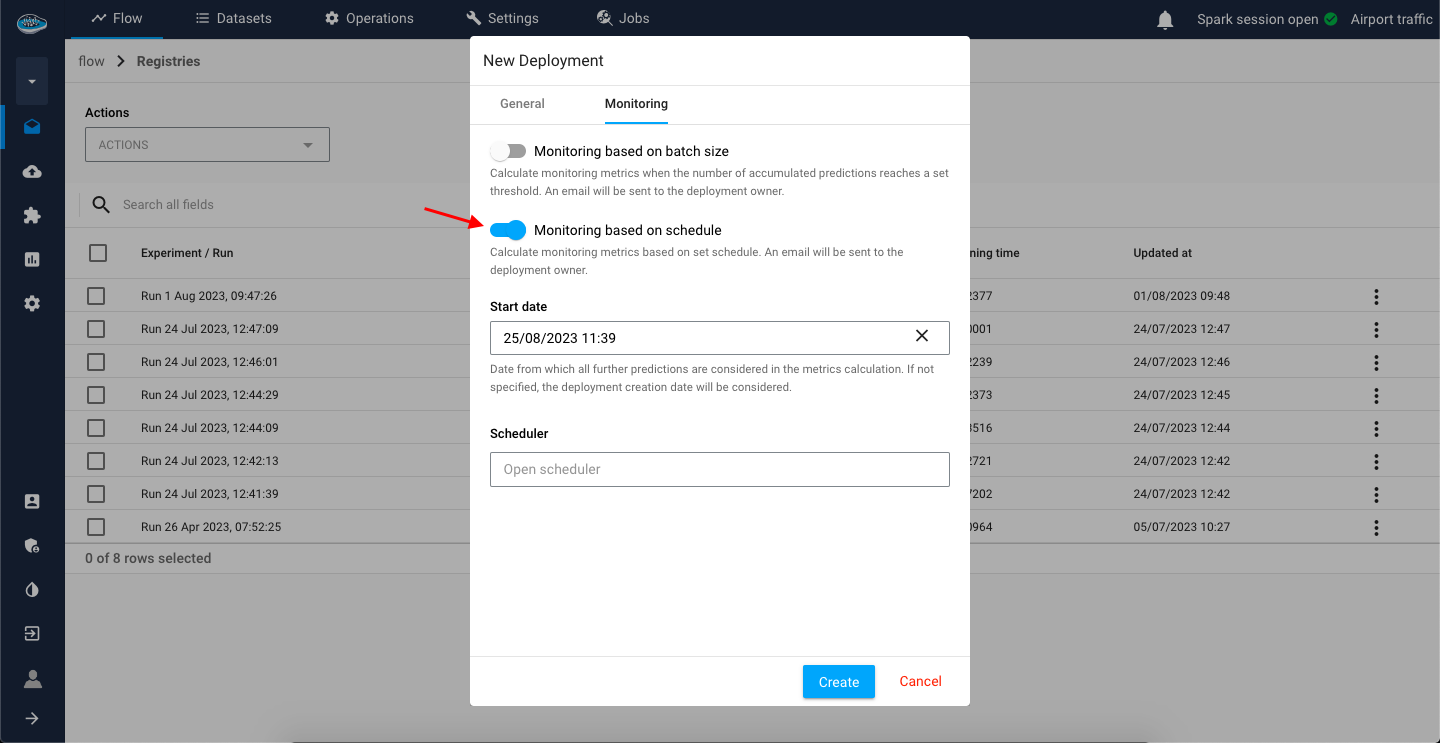
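As an illustration of the batch-size option, the sketch below counts predictions made at or after the start datetime (defaulting to the deployment's creation datetime) and runs a drift check each time a full batch has accumulated. The class name, the `on_batch` callback, and the assumption that the count restarts after each evaluated batch are hypothetical and not taken from papAI.

```python
from datetime import datetime
from typing import Any, Callable, List, Optional


class BatchDriftMonitor:
    """Hypothetical sketch of batch-size monitoring (not papAI's API)."""

    def __init__(
        self,
        batch_size: int,
        created_at: datetime,
        on_batch: Callable[[List[Any]], None],
        start: Optional[datetime] = None,
    ) -> None:
        if batch_size <= 0:
            raise ValueError("batch_size must be positive")
        self.batch_size = batch_size
        # Counting starts at the deployment's creation datetime unless an
        # explicit start datetime is supplied.
        self.start = start if start is not None else created_at
        self.on_batch = on_batch
        self.pending: List[Any] = []

    def record(self, timestamp: datetime, features: Any) -> None:
        """Register one prediction; trigger a drift check on a full batch."""
        if timestamp < self.start:
            return  # predictions made before the start datetime are ignored
        self.pending.append(features)
        if len(self.pending) == self.batch_size:
            # Assumption: each full batch is evaluated once, then the count restarts.
            self.on_batch(self.pending)
            self.pending = []
```

With `batch_size=3`, seven predictions recorded after the start datetime trigger two drift checks and leave one prediction waiting for the next batch.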

Monitoring based on schedule

The second option tracks data drift on a recurring schedule that begins at a specified start date. Data drift is computed automatically after each scheduled interval.
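The schedule-based option can be sketched in a similar way. The hypothetical generator below lists the drift-check times for a given start date and interval, together with the window of predictions each check would cover. It assumes that each check evaluates the predictions made during the interval that just ended, which the description above does not state explicitly.

```python
from datetime import datetime, timedelta
from typing import Iterator, Tuple


def drift_check_windows(
    start: datetime, interval: timedelta, until: datetime
) -> Iterator[Tuple[datetime, datetime]]:
    """Hypothetical sketch of schedule-based monitoring.

    Yields (window_start, check_time) pairs: a drift check runs at the end of
    each interval after `start`, over the predictions made in that interval.
    """
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    window_start = start
    # Stop once the next check would fall after `until`.
    while window_start + interval <= until:
        check_time = window_start + interval
        yield window_start, check_time
        window_start = check_time
```

With a start of 1 January 2024, a one-day interval, and `until=datetime(2024, 1, 4)`, checks fall at midnight on 2, 3 and 4 January.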

With either option, if the data drift exceeds the selected drift threshold, an alert is automatically sent to the deployment owner's email so that they can assess the situation and refresh the model predictions.

Tip

You can select both monitoring options if necessary.

Editing the monitoring settings

Once your deployment is created, you can still edit its monitoring settings by opening the deployed model from the dashboard and changing the desired settings.

The following video shows the process in papAI:

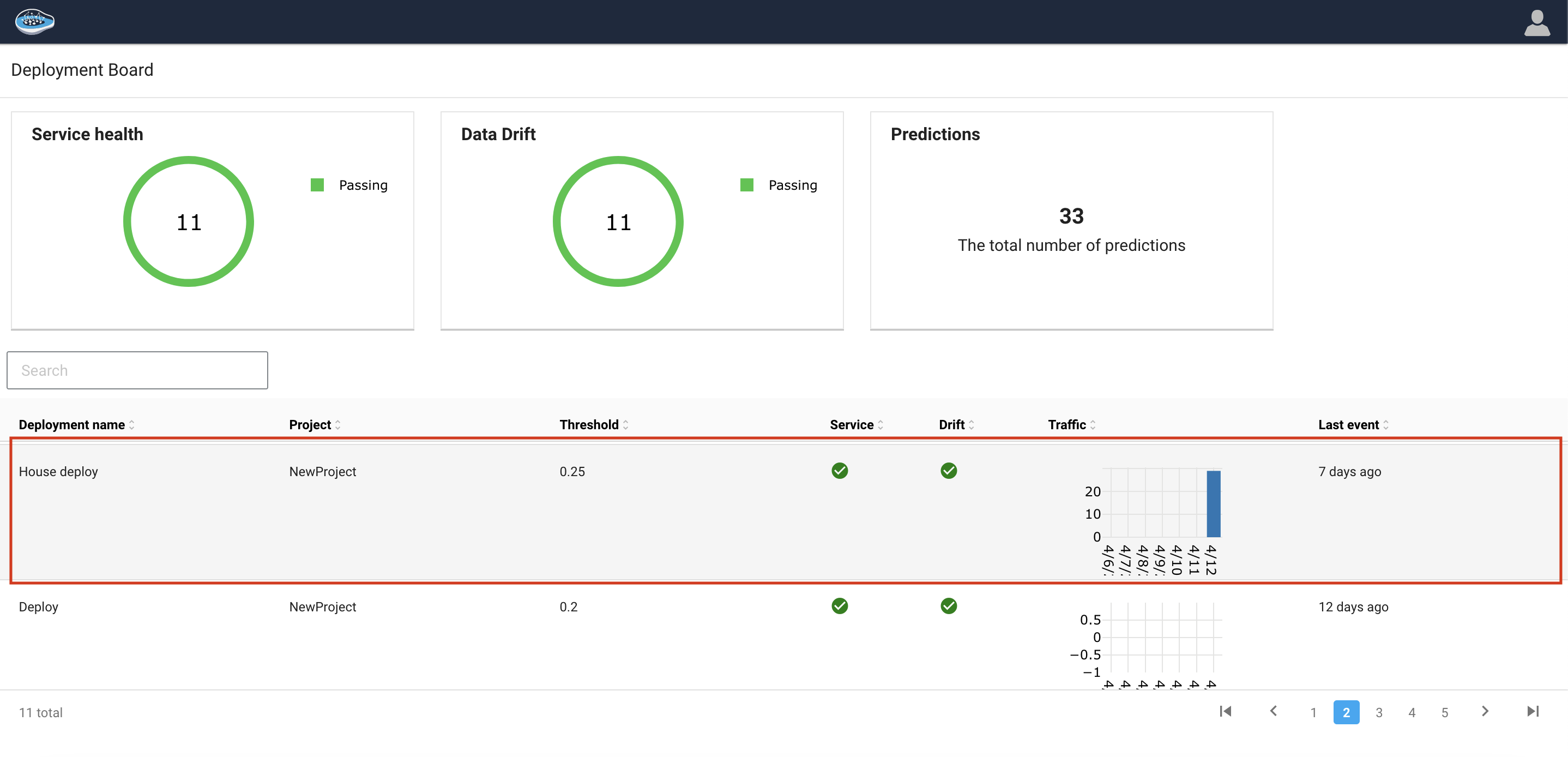

Deployment dashboard

Once models are deployed, the deployment dashboard is the central place for managing deployment activities and coordinating all stakeholders involved in operationalizing models. It provides a single inventory of actively deployed models, where you can monitor their performance and take any necessary actions.