Python and/or Spark SQL recipes

Data can be imported in many forms, as mentioned before, but you can also create your own dataset by coding it.

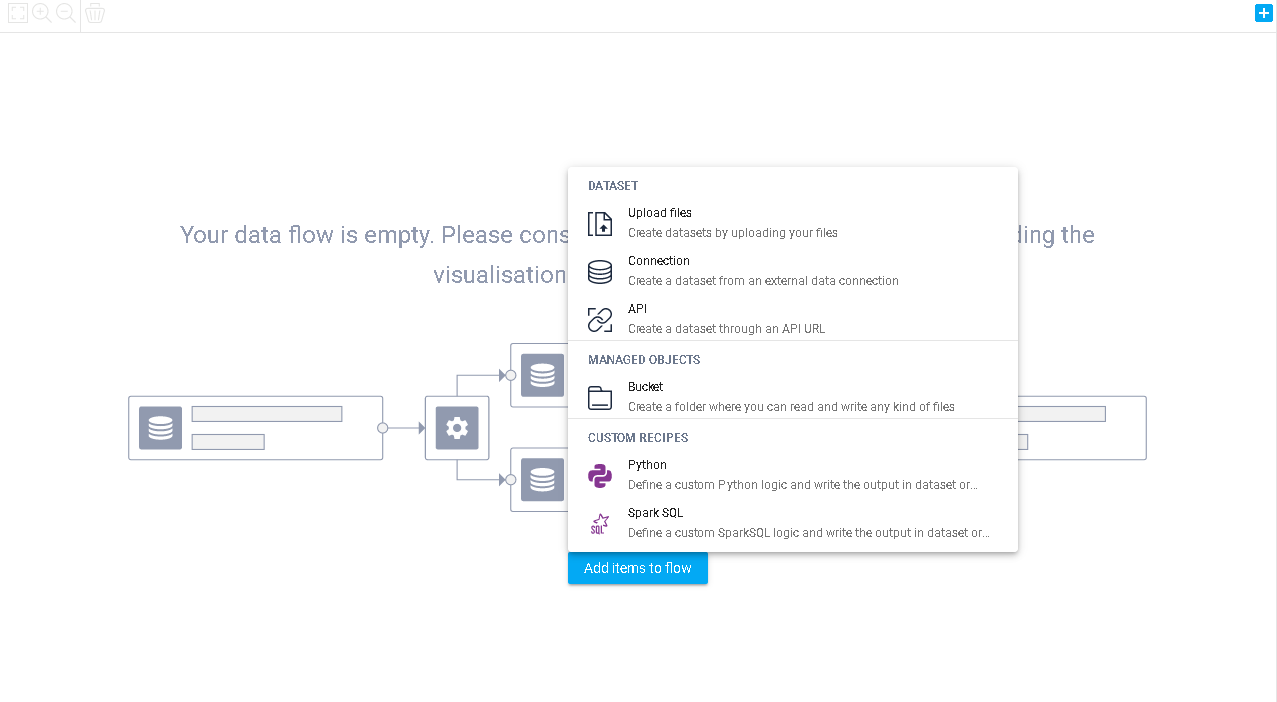

Thanks to the integrated Python and Spark SQL scripts, you can write code that creates your own dataset and exports it to your project's flow. Click the same Import a new dataset button, or the small icon in the top right corner, and select your preferred programming language, either Spark SQL or Python (other languages will be added in the future, so stay tuned). The programming interface then appears on the screen.

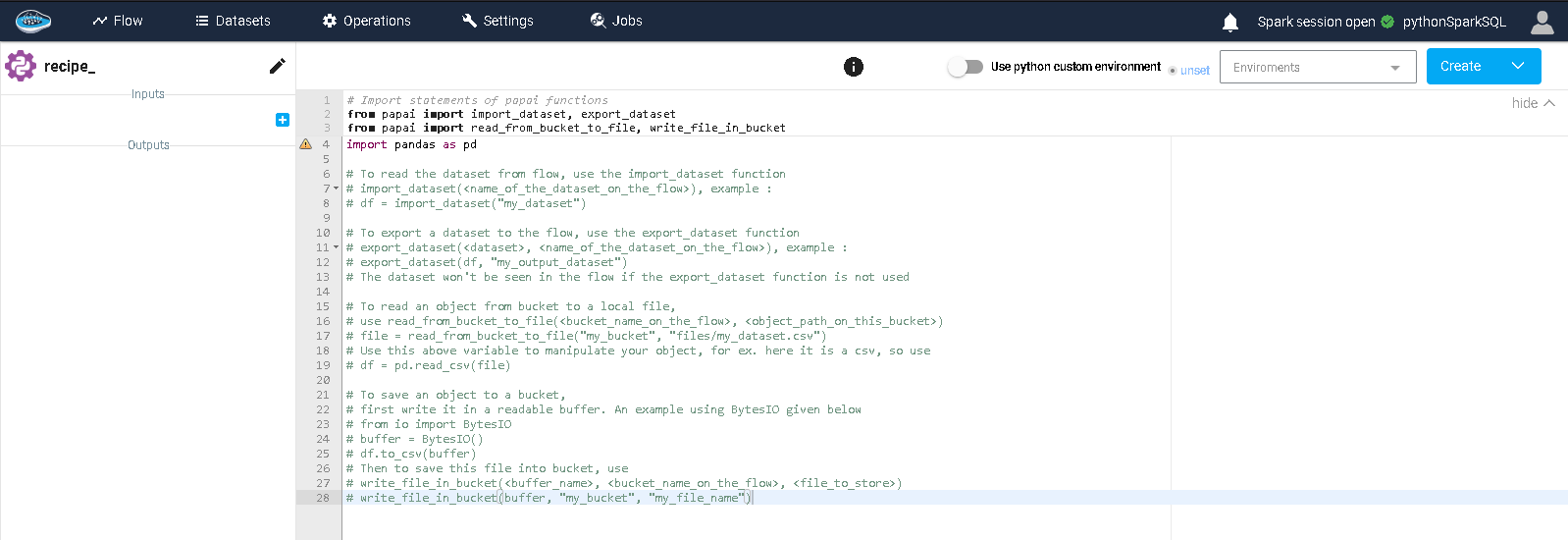

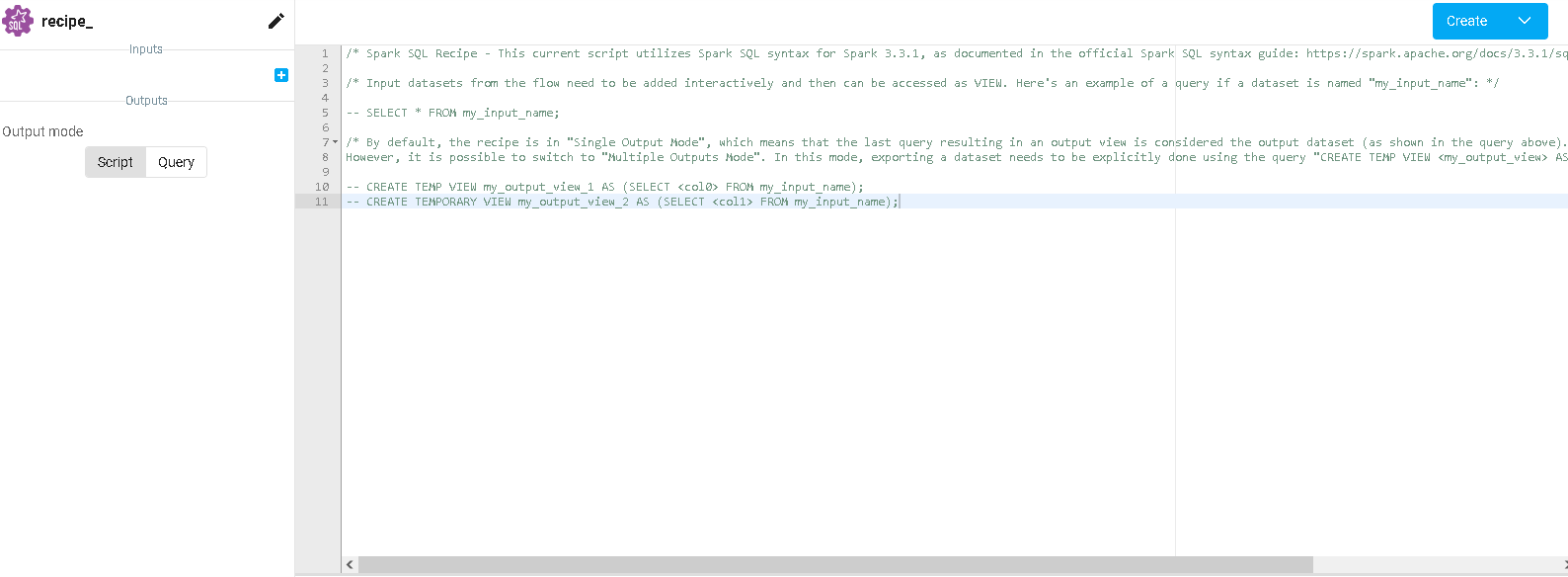

The interface has a left sidebar listing the data inputs and outputs (in this case, there are only outputs). By default there is a single output, but you can add more if needed and rename them. On the right-hand side is the editor where you type your code. A few lines of code and some instructions are added by default to help you get started writing your script correctly.
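As a rough illustration of what a script with two outputs might compute, here is a minimal Python sketch. It assumes each output is filled from a pandas DataFrame; the output names `sales` and `sales_by_region` and the `build_outputs` function are hypothetical, and the lines that actually bind a DataFrame to an output come from the default template in the editor, which is not reproduced here.

```python
from __future__ import annotations

import pandas as pd


def build_outputs() -> dict[str, pd.DataFrame]:
    """Build one DataFrame per output.

    Hypothetical: the dictionary keys stand in for the output names shown
    in the left sidebar. Keep the default template's own export lines to
    actually write each DataFrame to its output.
    """
    # First output: a small dataset created from scratch.
    sales = pd.DataFrame(
        {
            "region": ["north", "south", "north", "east"],
            "amount": [120.0, 80.5, 42.0, 99.9],
        }
    )

    # Second output: an aggregate derived from the first one.
    sales_by_region = (
        sales.groupby("region", as_index=False)["amount"]
        .sum()
        .rename(columns={"amount": "total_amount"})
    )

    return {"sales": sales, "sales_by_region": sales_by_region}


if __name__ == "__main__":
    for name, df in build_outputs().items():
        print(name, df.shape)
```

Keeping the data-building logic in a plain function makes it easy to test outside the editor and to map each returned DataFrame to whichever output the template expects.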

When you have finished writing your script, click the blue Create and Run button in the top right corner. The script starts running, and its status is displayed in the console at the bottom of the page, which tells you whether the run succeeded or ran into issues related to your script. Once the script is running, the output datasets appear in your project's flow, linked to your newly created Python or Spark SQL script. You can modify the script whenever you like and re-run it with the Save and Run button.
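Since the console reports whether a run succeeded or ran into issues, it can help to make a script fail early with an explicit message when its result looks wrong. A minimal sketch, assuming outputs are pandas DataFrames and that an uncaught exception is reported as a failed run (an assumption based on the behavior described above); the `check_output` helper and the column names are hypothetical, not part of the platform.

```python
from __future__ import annotations

import pandas as pd


def check_output(df: pd.DataFrame, required_columns: list[str]) -> pd.DataFrame:
    """Raise a descriptive error instead of silently writing a bad dataset.

    Hypothetical helper: call it on each DataFrame before the template's
    export lines so that a problem is surfaced as an error message.
    """
    missing = [col for col in required_columns if col not in df.columns]
    if missing:
        raise ValueError(f"Output is missing columns: {missing}")
    if df.empty:
        raise ValueError("Output dataset is empty")
    return df


df = pd.DataFrame({"id": [1, 2], "value": [3.5, 4.0]})
check_output(df, ["id", "value"])  # passes and returns df unchanged
```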

Tip

Don't forget to save the modifications made to your script with the Save and Run button, located in the top right corner of the screen; otherwise all your progress will be lost and the output dataset won't be refreshed as expected.

Did you know?

Python scripts also include essential libraries that give you great freedom when coding, such as: Pandas, NumPy, scikit-learn, lightgbm, category_encoders, xgboost, skorch, torch, lime, shap, requests, psycopg2-binary and sqlalchemy.
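For example, the bundled libraries are enough to generate a synthetic dataset without importing any data. The sketch below uses scikit-learn's `make_classification` together with pandas and NumPy; the column names, sizes and random seed are arbitrary choices for illustration, and attaching `dataset` to an output is left to the editor's default template.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_classification

# Generate 200 rows with 4 numeric features and a binary target.
X, y = make_classification(
    n_samples=200,
    n_features=4,
    n_informative=2,
    n_redundant=0,
    random_state=42,
)

# Wrap the arrays in a DataFrame with readable column names.
dataset = pd.DataFrame(X, columns=[f"feature_{i}" for i in range(X.shape[1])])
dataset["target"] = y

# Add a derived column computed with NumPy (Euclidean norm of each row).
dataset["feature_norm"] = np.linalg.norm(X, axis=1)

print(dataset.head())
```

Fixing `random_state` keeps the generated rows identical from one run to the next, so re-running the script with Save and Run refreshes the output without changing its content.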

Note

If you need other libraries for your script, see this page on virtual environments.

Info

If you want to use a script with input datasets, see this page.